The goal of this assignment is to practice reading query execution plans and optimizing queries.

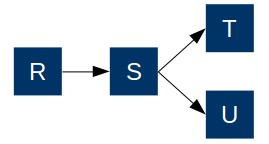

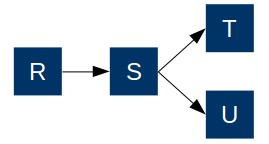

You will be working with four tables, R, S, T, U, connected by foreign

keys:

The DDL creating these tables is as follows:

create table U(u_id int not null primary key,

u_5 int,

u_filler varchar)

create table T(t_id int not null primary key,

t_5 int,

t_filler varchar)

create table S(s_id int not null primary key,

s_4 int,

s_20 int,

s_filler varchar,

t_id int references T,

u_id int references U)

create table R(r_id int not null primary key,

r_2 int,

r_10 int,

r_1p int,

r_10p int,

r_50p int,

r_filler varchar,

s_id int references S)

Row counts are as follows:

The column naming scheme describes the selectivity of the columns. As you know, that information is crucial for understanding performance and optimizing queries.

So, for example:

| select count(*) from r where r_2 = 0 |

2 |

| select count(*) from r where r_10 = 0 |

10 |

| select count(*) from r where r_1p = 0 |

20,000 |

| select count(*) from r where r_10p = 0 |

200,000 |

psql -h postgres.cs.tufts.edu < loaddb.sql

If you are using a postgres database on your own computer, you don't need the -h postgres.cs.tufts.edu arguments.

This should take under 2 minutes to run. (25 seconds on my laptop, probably slower on the homework and postgres servers.) You should only need to run this once, because to work on this assignment, you will not be modifying data. You will be adding and dropping indexes, but those are easily reversible changes.

To verify that the database is set up correctly, check the row counts, (e.g. select count(*) from r). The expected counts are given above.

I strongly suggest that you work on each query by putting the explains, index creation, and index deletion steps in a script. You will spend far too much time typing and getting things subtly wrong otherwise.

For example, suppose that I am working on this query:

select *

from s

where s_10p between 1 and 2

I want to examine the cost without an index, and with. I would

create a script, test.sql as follows:

select 'ORIGINAL -- NO INDEXES';

explain

select *

from r

where r_10p between 1 and 2;

select 'ADD INDEX ON r_10P';

create index on r(r_10p);

analyze r;

explain

select *

from r

where r_10p between 1 and 2;

drop index r_r_10p_idx;

Note the following:

jao=# \d r

Table "public.r"

Column | Type | Modifiers

----------+-------------------+-----------

r_id | integer | not null

r_2 | integer |

r_10 | integer |

r_1p | integer |

r_10p | integer |

r_50p | integer |

r_filler | character varying |

s_id | integer |

Indexes:

"r_pkey" PRIMARY KEY, btree (r_id)

"r_r_10p_idx" btree (r_10p)

"r_r_10p_idx1" btree (r_10p)

"r_r_10p_idx2" btree (r_10p)

Foreign-key constraints:

"r_s_id_fkey" FOREIGN KEY (s_id) REFERENCES s(s_id)

Don't worry about the time taken by all the creation and deletion of indexes. The largest table has only 2M rows, and these steps will run quickly, (1-2 seconds).

I run my script as follows:

psql < test.sql > test.out

Here is the output (in test.out):

Pager usage is off.

?column?

------------------------

ORIGINAL -- NO INDEXES

(1 row)

QUERY PLAN

-----------------------------------------------------------

Seq Scan on r (cost=0.00..46667.00 rows=402200 width=39)

Filter: ((r_10p >= 1) AND (r_10p <= 2))

(2 rows)

?column?

--------------------

ADD INDEX ON r_10P

(1 row)

CREATE INDEX

ANALYZE

QUERY PLAN

----------------------------------------------------------------------------------

Bitmap Heap Scan on r (cost=8532.24..31228.24 rows=401933 width=39)

Recheck Cond: ((r_10p >= 1) AND (r_10p <= 2))

-> Bitmap Index Scan on r_r_10p_idx (cost=0.00..8431.76 rows=401933 width=0)

Index Cond: ((r_10p >= 1) AND (r_10p <= 2))

(4 rows)

DROP INDEX

I notice the following:

Plans print costs like this:

HashAggregate (cost=

The first red number is the cost to produce the first row, and the second one is the cost to produce the entire query result.

In all cases, aim to optimize the time to produce the entire query result, not just the first row.

For each question, submit the .sql file used to run explain, trying various indexing strategies. Also submit the output from each script. For the first question, put your written answers in q1.txt. For the remaining questions, include a text file identifying: 1) the set of indexes that led to the cheapest plan, (not all indexes, just the ones used in the cheapest plan); 2) the cheapest plan; and 3) the cost of that plan. If you just include explain output, that will include the plan and its cost. I.e., include qi.sql, qi.out, qi.txt for i = 1..7.

You should see three different plans.

Create one or more indexes to get the best possible performance for this query:

select * from r

where r_1p = 0

and r_10p = 0

and r_50p = 0

Create one or more indexes to get the best possible performance for this query:

select * from r

where r_1p between 0 and 5

and r_10p = 0

and r_50p = 0

Create one or more indexes to get the best possible performance for this query:

select t.*

from t

join s using (t_id)

join r using (s_id)

join u using (u_id)

where u_5 = 0

Create one or more indexes to get the best possible performance for this query:

select r_10, s_20

from r

join s using (s_id)

where r_50p = 0

and s_4 = 0

This time, consider indexing strategies as usual, but also consider rewriting

the query to obtain a query that can be optimized better.

select *

from r

where r_1p = 0

union

select *

from r

where r_10 = 0

As with #6, consider both indexing strategies, and rewriting the query.

select *

from r

where s_id in (

select s_id

from s

where s_20 = 0

and t_id in (

select t_id

from t

where t_5 = 0

)

)

Here are the lowest cost estimates I was able to obtain.

(Postgres 10.6) |

(Postgres 9.2.24) |

|

| Q2 | 2963.11 | 3274.77 |

| Q3 | 11620.25 | 12372.42 |

| Q4 | 69.29 | 107.09 |

| Q5 | 117.69 | 118.11 |

| Q6 | 18286.34 | 18321.37 |

| Q7 | 110.02 | 109.17 |